Flux

Related Docs: class Flux | package publisher

Build a Flux whose data are generated by the combination of the most recent published values from all publishers.

The common base type of the source sequences

The produced output after transformation by the given combinator

The list of upstream Publisher to subscribe to.

demand produced to each combined source Publisher

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

a Flux based on the produced value

Build a Flux whose data are generated by the combination of the most recent published values from all publishers.

The common base type of the source sequences

The produced output after transformation by the given combinator

The list of upstream Publisher to subscribe to.

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

a Flux based on the produced value

Build a Flux whose data are generated by the combination of the most recent published values from all publishers.

type of the value from source1

type of the value from source2

type of the value from source3

type of the value from source4

type of the value from source5

type of the value from source6

The produced output after transformation by the given combinator

The first upstream Publisher to subscribe to.

The second upstream Publisher to subscribe to.

The third upstream Publisher to subscribe to.

The fourth upstream Publisher to subscribe to.

The fifth upstream Publisher to subscribe to.

The sixth upstream Publisher to subscribe to.

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

a Flux based on the produced value

Build a Flux whose data are generated by the combination of the most recent published values from all publishers.

type of the value from source1

type of the value from source2

type of the value from source3

type of the value from source4

type of the value from source5

The produced output after transformation by the given combinator

The first upstream Publisher to subscribe to.

The second upstream Publisher to subscribe to.

The third upstream Publisher to subscribe to.

The fourth upstream Publisher to subscribe to.

The fifth upstream Publisher to subscribe to.

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

a Flux based on the produced value

Build a Flux whose data are generated by the combination of the most recent published values from all publishers.

type of the value from source1

type of the value from source2

type of the value from source3

type of the value from source4

The produced output after transformation by the given combinator

The first upstream Publisher to subscribe to.

The second upstream Publisher to subscribe to.

The third upstream Publisher to subscribe to.

The fourth upstream Publisher to subscribe to.

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

a Flux based on the produced value

Build a Flux whose data are generated by the combination of the most recent published values from all publishers.

type of the value from source1

type of the value from source2

type of the value from source3

The produced output after transformation by the given combinator

The first upstream Publisher to subscribe to.

The second upstream Publisher to subscribe to.

The third upstream Publisher to subscribe to.

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

a Flux based on the produced value

Build a Flux whose data are generated by the combination of the most recent published values from all publishers.

type of the value from source1

type of the value from source2

The produced output after transformation by the given combinator

The first upstream Publisher to subscribe to.

The second upstream Publisher to subscribe to.

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

a Flux based on the produced value
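The "most recent published values" behaviour can be sketched with plain Scala collections. This is only a model for illustration, not the library's implementation: `CombineLatestModel` and the `Either`-encoded event list are assumptions, where `Left` stands for an emission from the first source and `Right` for an emission from the second.

```scala
object CombineLatestModel {
  // Replays a recorded timeline of emissions from two sources and returns
  // what a combine-latest operator would signal downstream.
  def combineLatest[A, B, V](events: List[Either[A, B]])(combinator: (A, B) => V): List[V] = {
    var latestA: Option[A] = None
    var latestB: Option[B] = None
    val out = List.newBuilder[V]
    events.foreach { event =>
      event match {
        case Left(a)  => latestA = Some(a)
        case Right(b) => latestB = Some(b)
      }
      // Nothing is produced until every source has emitted at least once;
      // after that, each new emission is combined with the other source's latest value.
      for (a <- latestA; b <- latestB) out += combinator(a, b)
    }
    out.result()
  }
}

object CombineLatestDemo extends App {
  val events: List[Either[Int, String]] = List(Left(1), Right("a"), Left(2), Right("b"))
  println(CombineLatestModel.combineLatest(events)((n, s) => s"$n$s")) // List(1a, 2a, 2b)
}
```

The first `Left(1)` produces no output because the second source has not emitted yet.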

Build a Flux whose data are generated by the combination of the most recent published values from all publishers.

type of the value from sources

The produced output after transformation by the given combinator

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

demand produced to each combined source Publisher

The upstream Publishers to subscribe to.

a Flux based on the produced combinations

Build a Flux whose data are generated by the combination of the most recent published values from all publishers.

type of the value from sources

The produced output after transformation by the given combinator

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

The upstream Publishers to subscribe to.

a Flux based on the produced combinations

Concat all sources pulled from the given Publisher array. A complete signal from each source will delimit the individual sequences and will be eventually passed to the returned Publisher.

The source type of the data sequence

The array of Publisher to concat

a new Flux concatenating all source sequences
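As a rough illustration of how each source's completion delimits the next one, the following plain-Scala sketch models sources as thunks that yield an `Iterator` when "subscribed". `ConcatModel` is a hypothetical name and the code does not call the library. It only shows that a later source is not touched until the earlier one is exhausted.

```scala
import scala.collection.mutable.ListBuffer

object ConcatModel {
  // Each source is a thunk, so "subscribing" (evaluating it) can be deferred
  // until the previous source has completed.
  def concat[T](sources: (() => Iterator[T])*): Iterator[T] =
    sources.iterator.flatMap(source => source())
}

object ConcatDemo extends App {
  val log = ListBuffer.empty[String]
  val first: () => Iterator[Int]  = () => { log += "subscribe first"; Iterator(1, 2) }
  val second: () => Iterator[Int] = () => { log += "subscribe second"; Iterator(3) }

  val out = ConcatModel.concat(first, second)
  println(log.toList) // List(): nothing is subscribed before items are requested
  println(out.toList) // List(1, 2, 3)
  println(log.toList) // List(subscribe first, subscribe second)
}
```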

Concat all sources emitted as an onNext signal from a parent Publisher. A complete signal from each source will delimit the individual sequences and will be eventually passed to the returned Publisher which will stop listening if the main sequence has also completed.

The source type of the data sequence

The Publisher of Publisher to concat

the inner source request size

a new Flux concatenating all inner sources sequences until complete or error

Concat all sources emitted as an onNext signal from a parent Publisher. A complete signal from each source will delimit the individual sequences and will be eventually passed to the returned Publisher which will stop listening if the main sequence has also completed.

The source type of the data sequence

The Publisher of Publisher to concat

a new Flux concatenating all inner sources sequences until complete or error

Concat all sources pulled from the supplied Iterator on Publisher.subscribe from the passed Iterable until Iterator.hasNext returns false. A complete signal from each source will delimit the individual sequences and will be eventually passed to the returned Publisher.

The source type of the data sequence

The Iterable of Publisher to concat

a new Flux concatenating all source sequences

Concat all sources pulled from the given Publisher array. A complete signal from each source will delimit the individual sequences and will be eventually passed to the returned Publisher. Any error will be delayed until all sources have been concatenated.

The source type of the data sequence

The array of Publisher to concat

a new Flux concatenating all source sequences

Concat all sources emitted as an onNext signal from a parent Publisher. A complete signal from each source will delimit the individual sequences and will be eventually passed to the returned Publisher which will stop listening if the main sequence has also completed.

Errors will be delayed after the current concat backlog if delayUntilEnd is false or after all sources if delayUntilEnd is true.

The source type of the data sequence

The Publisher of Publisher to concat

delay error until all sources have been consumed instead of after the current source

the inner source request size

a new Flux concatenating all inner sources sequences until complete or error

Concat all sources emitted as an onNext signal from a parent Publisher. A complete signal from each source will delimit the individual sequences and will be eventually passed to the returned Publisher which will stop listening if the main sequence has also completed.

The source type of the data sequence

The Publisher of Publisher to concat

the inner source request size

a new Flux concatenating all inner sources sequences until complete or error

Concat all sources emitted as an onNext signal from a parent Publisher. A complete signal from each source will delimit the individual sequences and will be eventually passed to the returned Publisher which will stop listening if the main sequence has also completed.

The source type of the data sequence

The Publisher of Publisher to concat

a new Flux concatenating all inner sources sequences until complete or error

Creates a Flux with multi-emission capabilities (synchronous or asynchronous) through the FluxSink API.

This Flux factory is useful if one wants to adapt some other multi-valued async API and not worry about cancellation and backpressure. For example:

    // assumes a Swing `button` and `textField` are in scope
    Flux.create[String](emitter => {
      val al: ActionListener = e => {
        emitter.next(textField.getText())
      }
      // without cancellation support:
      button.addActionListener(al)
      // with cancellation support:
      button.addActionListener(al)
      emitter.setCancellation(() => {
        button.removeActionListener(al)
      })
    }, FluxSink.OverflowStrategy.LATEST)

the value type

the consumer that will receive a FluxSink for each individual Subscriber.

the backpressure mode, see OverflowStrategy for the available backpressure modes

a Flux

Creates a Flux with multi-emission capabilities (synchronous or asynchronous) through the FluxSink API.

This Flux factory is useful if one wants to adapt some other multi-valued async API and not worry about cancellation and backpressure. It handles backpressure by buffering all signals if the downstream can't keep up. For example:

    // assumes a Swing `button` and `textField` are in scope
    Flux.create[String](emitter => {
      val al: ActionListener = e => {
        emitter.next(textField.getText())
      }
      // without cancellation support:
      button.addActionListener(al)
      // with cancellation support:
      button.addActionListener(al)
      emitter.setCancellation(() => {
        button.removeActionListener(al)
      })
    })

the value type

the consumer that will receive a FluxSink for each individual Subscriber.

a Flux

Supply a Publisher every time subscribe is called on the returned Flux. The passed scala.Function1[Unit,Publisher[T]] will be invoked, and it is up to the developer to choose to return a new instance of a Publisher or to reuse one, effectively behaving like Flux.from.
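The "invoked on every subscribe" behaviour can be modelled in a few lines of plain Scala. In this sketch a publisher is represented by a function that returns its items when called. `DeferModel` and `Source` are hypothetical names used only for illustration and are not part of the library.

```scala
object DeferModel {
  // A "publisher" is modelled as a function that yields its items when subscribed to.
  type Source[T] = () => List[T]

  // The supplier is not called when defer is built, only on each subscription.
  def defer[T](supplier: () => Source[T]): Source[T] =
    () => supplier()()
}

object DeferDemo extends App {
  var calls = 0
  val deferred = DeferModel.defer[Int](() => {
    calls += 1
    val n = calls
    () => List(n) // a fresh source per subscription
  })

  println(calls)      // 0: the supplier has not run yet
  println(deferred()) // List(1)
  println(deferred()) // List(2)
}
```

Returning the same source instance from the supplier every time would make every subscription see the same items, which is the "reuse one" case mentioned above.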

Create a Flux that completes without emitting any item.

Build a Flux that will only emit an error signal to any new subscriber.

Create a Flux that completes with the specified error.

Select the fastest source, that is, the one that won the "ambiguous" race by emitting the first onNext, onComplete or onError.

The source type of the data sequence

The competing source publishers

a new Flux eventually subscribed to one of the sources or empty

Select the fastest source, that is, the one that emitted the first onNext, onComplete or onError.

The source type of the data sequence

The competing source publishers

a new Flux eventually subscribed to one of the sources or empty

Expose the specified Publisher with the Flux API.

Create a Flux that emits the items contained in the provided scala.Array.

Create a Flux that emits the items contained in the provided Iterable.

Create a Flux that emits the items contained in the provided Stream.

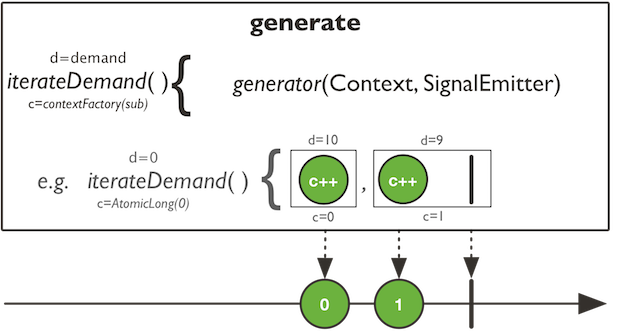

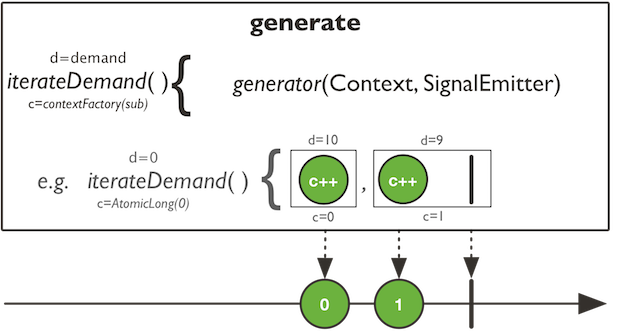

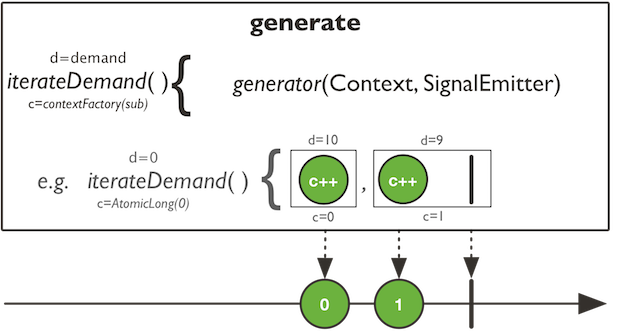

Generate signals one-by-one via a function callback.

the value type emitted

the custom state per subscriber

called for each incoming Subscriber to provide the initial state for the generator bifunction

the bifunction called with the current state and the SynchronousSink API instance; it is expected to return a (new) state.

called after the generator has terminated or the downstream cancelled, receiving the last state to be handled (i.e., release resources or do other cleanup).

a Reactive Flux publisher ready to be subscribed
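The interplay of the state supplier, the generator bifunction and the state consumer can be sketched as a simple loop. This is a plain-Scala model under stated assumptions, not the library's code: `GenerateModel`, its `Sink` stand-in and the `demand` parameter (how many items the downstream requests) are hypothetical.

```scala
object GenerateModel {
  // Minimal stand-in for a synchronous sink: at most one `next` per generator call.
  final class Sink[T] {
    private[GenerateModel] var emitted: Option[T] = None
    private[GenerateModel] var done: Boolean = false
    def next(value: T): Unit = {
      require(emitted.isEmpty, "at most one next per generator invocation")
      emitted = Some(value)
    }
    def complete(): Unit = { done = true }
  }

  def generate[T, S](demand: Int)(stateSupplier: () => S)(generator: (S, Sink[T]) => S)(
      stateConsumer: S => Unit): List[T] = {
    var state = stateSupplier() // initial state, created per "subscriber"
    val out = List.newBuilder[T]
    var produced = 0
    var finished = false
    while (!finished && produced < demand) {
      val sink = new Sink[T]
      state = generator(state, sink) // one signal per round, new state returned
      sink.emitted.foreach { v => out += v; produced += 1 }
      finished = sink.done
    }
    stateConsumer(state) // last state handed over for cleanup
    out.result()
  }
}

object GenerateDemo extends App {
  val items = GenerateModel.generate[Int, Int](demand = 10)(() => 0) { (s, sink) =>
    if (s < 3) sink.next(s * 10) else sink.complete()
    s + 1
  }(last => println(s"cleaning up state $last")) // prints "cleaning up state 4"
  println(items) // List(0, 10, 20)
}
```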

Generate signals one-by-one via a function callback.

the value type emitted

the custom state per subscriber

called for each incoming Subscriber to provide the initial state for the generator bifunction

the bifunction called with the current state and the SynchronousSink API instance; it is expected to return a (new) state.

a Reactive Flux publisher ready to be subscribed

Generate signals one-by-one via a consumer callback.

the value type emitted

the consumer called with the SynchronousSink API instance

a Reactive Flux publisher ready to be subscribed

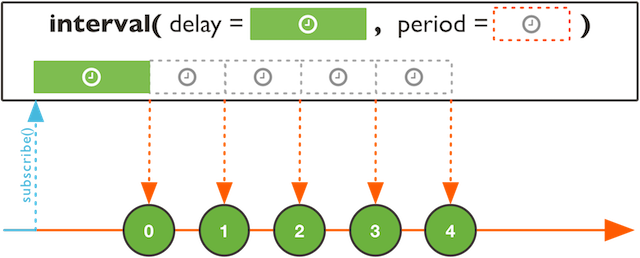

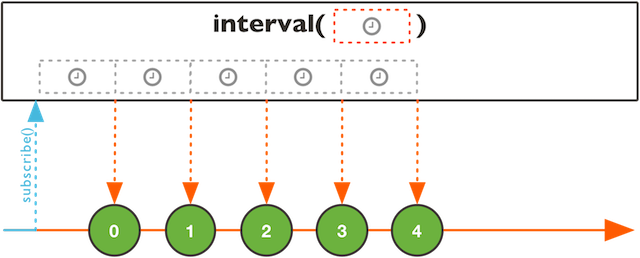

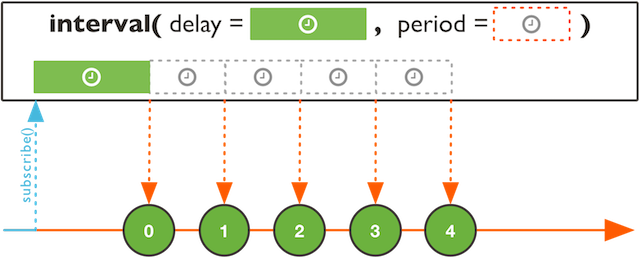

Create a new Flux that emits an ever-incrementing long, starting with 0, once per period on the given timer. If demand is not produced in time, an onError will be signalled. The Flux will never complete.

the timespan in milliseconds to wait before emitting the first value (0)

the period in milliseconds before each following increment

the Scheduler to schedule on

a new timed Flux

Create a new Flux that emits an ever incrementing long starting with 0 every N milliseconds on the given timer. If demand is not produced in time, an onError will be signalled. The Flux will never complete.

The duration in milliseconds to wait before the next increment

a Scheduler instance

a new timed Flux

Create a new Flux that emits an ever-incrementing long, starting with 0, once per period on a global timer. If demand is not produced in time, an onError will be signalled. The Flux will never complete.

the delay to wait before emitting the first value (0)

the period before each following increment

a new timed Flux

Create a new Flux that emits an ever incrementing long starting with 0 every period on the global timer.

Create a new Flux that emits the specified items and then completes.

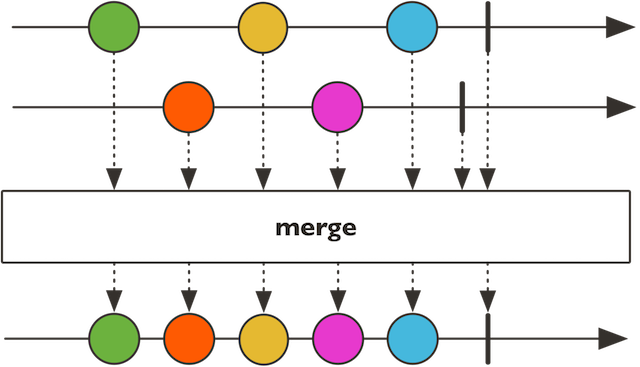

Merge emitted Publisher sequences from the passed Publisher array into an interleaved merged sequence.

The source type of the data sequence

the inner source request size

the Publisher array to iterate on Publisher.subscribe

a fresh Reactive Flux publisher ready to be subscribed

Merge emitted Publisher sequences from the passed Publisher array into an interleaved merged sequence.

The source type of the data sequence

the Publisher array to iterate on Publisher.subscribe

a fresh Reactive Flux publisher ready to be subscribed

Merge emitted Publisher sequences from the passed Publisher into an interleaved merged sequence. Iterable.iterator() will be called for each Publisher.subscribe.

The source type of the data sequence

the scala.Iterable to lazily iterate on Publisher.subscribe

a fresh Reactive Flux publisher ready to be subscribed

Merge emitted Publisher sequences by the passed Publisher into an interleaved merged sequence.

the merged type

a Publisher of Publisher sequence to merge

the request produced to the main source thus limiting concurrent merge backlog

the inner source request size

a merged Flux

Merge emitted Publisher sequences by the passed Publisher into an interleaved merged sequence.

the merged type

a Publisher of Publisher sequence to merge

the request produced to the main source thus limiting concurrent merge backlog

a merged Flux

Merge emitted Publisher sequences by the passed Publisher into an interleaved merged sequence.

the merged type

a Publisher of Publisher sequence to merge

a merged Flux

Merge emitted Publisher sequences from the passed Publisher array into an interleaved merged sequence.

The source type of the data sequence

the inner source request size

the Publisher array to iterate on Publisher.subscribe

a fresh Reactive Flux publisher ready to be subscribed

Merge Publisher sequences from an Iterable into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

the merged type

an Iterable of Publisher sequences to merge

the request produced to the main source thus limiting concurrent merge backlog

the inner source request size

a merged Flux, subscribing early but keeping the original ordering
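The difference between an interleaved merge and an ordered (sequential) merge can be illustrated on recorded, timestamped emissions. The sketch below is a plain-Scala model, not library code. `MergeModel`, `Emission` and the integer timestamps are assumptions, and all sources are taken to be subscribed at time 0.

```scala
object MergeModel {
  // One recorded emission: when it arrived and what it carried.
  final case class Emission[T](time: Int, value: T)

  // merge: values are interleaved in arrival order, whatever their source.
  def merge[T](sources: List[List[Emission[T]]]): List[T] =
    sources.flatten.sortBy(_.time).map(_.value)

  // mergeSequential: sources run concurrently, but their values are
  // replayed downstream in subscription order.
  def mergeSequential[T](sources: List[List[Emission[T]]]): List[T] =
    sources.flatMap(_.map(_.value))
}

object MergeDemo extends App {
  import MergeModel._
  val a = List(Emission(1, "a1"), Emission(4, "a2"))
  val b = List(Emission(2, "b1"), Emission(3, "b2"))

  println(merge(List(a, b)))           // List(a1, b1, b2, a2)
  println(mergeSequential(List(a, b))) // List(a1, a2, b1, b2)
}
```

In this model `mergeSequential` yields the same values as a concatenation would. The difference described above is that the inner publishers are subscribed to eagerly rather than one after another.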

Merge Publisher sequences from an Iterable into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

the merged type

an Iterable of Publisher sequences to merge

a merged Flux, subscribing early but keeping the original ordering

Merge a number of Publisher sequences into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

the merged type

the inner source request size

a number of Publisher sequences to merge

a merged Flux, subscribing early but keeping the original ordering

Merge a number of Publisher sequences into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

the merged type

a number of Publisher sequences to merge

a merged Flux

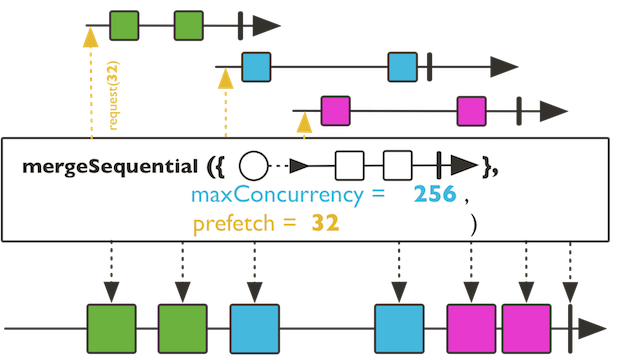

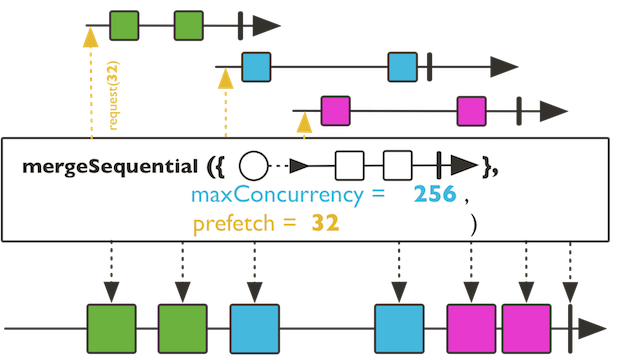

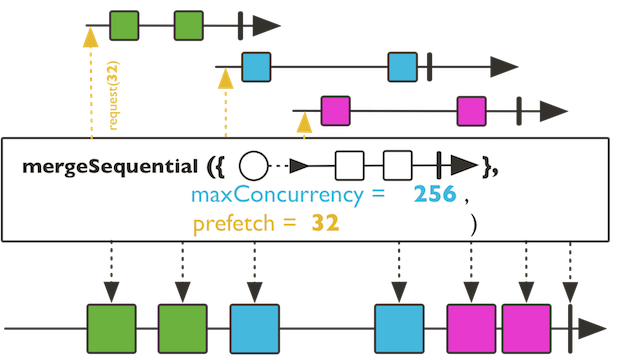

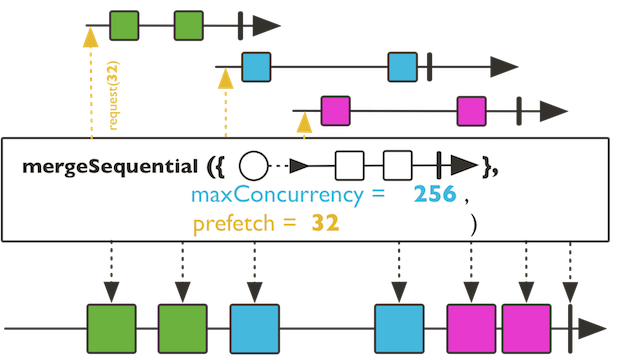

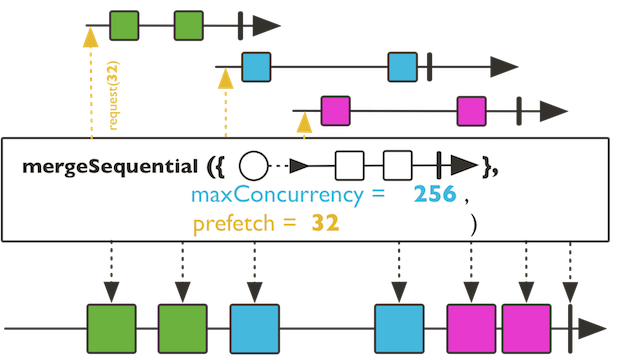

Merge emitted Publisher sequences by the passed Publisher into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

the merged type

a Publisher of Publisher sequence to merge

the request produced to the main source thus limiting concurrent merge backlog

the inner source request size

a merged Flux

Merge emitted Publisher sequences by the passed Publisher into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

the merged type

a Publisher of Publisher sequence to merge

a merged Flux

Merge Publisher sequences from an Iterable into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

the merged type

an Iterable of Publisher sequences to merge

the request produced to the main source thus limiting concurrent merge backlog

the inner source request size

a merged Flux

Merge a number of Publisher sequences into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

the merged type

the inner source request size

a number of Publisher sequences to merge

a merged Flux

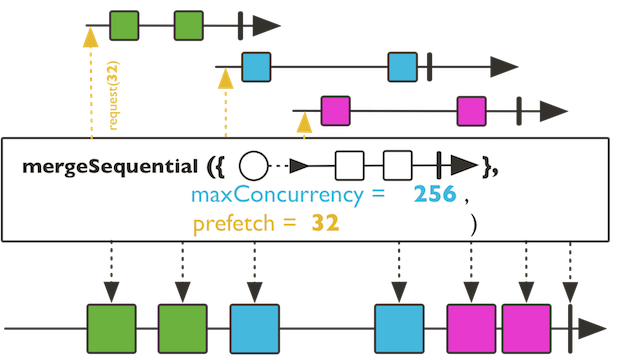

Merge emitted Publisher sequences by the passed Publisher into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order. This variant will delay any error until after the rest of the mergeSequential backlog has been processed.

the merged type

a Publisher of Publisher sequence to merge

the request produced to the main source thus limiting concurrent merge backlog

the inner source request size

a merged Flux, subscribing early but keeping the original ordering

Create a Flux that will never signal any data, error or completion signal.

Creates a Flux with multi-emission capabilities from a single threaded producer through the FluxSink API.

This Flux factory is useful if one wants to adapt some other single-threaded multi-valued async API and not worry about cancellation and backpressure. For example:

    // assumes a Swing `button` and `textField` are in scope
    Flux.push[String](emitter => {
      val al: ActionListener = e => {
        emitter.next(textField.getText())
      }
      // without cleanup support:
      button.addActionListener(al)
      // with cleanup support:
      button.addActionListener(al)
      emitter.onDispose(() => {
        button.removeActionListener(al)
      })
    }, FluxSink.OverflowStrategy.LATEST)

the value type

the consumer that will receive a FluxSink for each individual Subscriber.

the backpressure mode, see OverflowStrategy for the available backpressure modes

a Flux

Creates a Flux with multi-emission capabilities from a single threaded producer through the FluxSink API.

This Flux factory is useful if one wants to adapt some other single-threaded multi-valued async API and not worry about cancellation and backpressure. For example:

    // assumes a Swing `button` and `textField` are in scope
    Flux.push[String](emitter => {
      val al: ActionListener = e => {
        emitter.next(textField.getText())
      }
      // without cleanup support:
      button.addActionListener(al)
      // with cleanup support:
      button.addActionListener(al)
      emitter.onDispose(() => {
        button.removeActionListener(al)
      })
    }, FluxSink.OverflowStrategy.LATEST)

the value type

the consumer that will receive a FluxSink for each individual Subscriber.

a Flux

Build a Flux that will only emit a sequence of count incrementing integers, starting from start (inclusive) and ending at start + count (exclusive), then complete.
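The bounds are easy to get off by one, so here is a small plain-Scala model of the emitted values. `RangeModel` is a hypothetical helper for illustration and does not call the library. It assumes the second argument is the number of items, not an end value.

```scala
object RangeModel {
  // Emits `count` integers: start, start + 1, ..., start + count - 1.
  def range(start: Int, count: Int): List[Int] = {
    require(count >= 0, "count must be non-negative")
    (start until start + count).toList
  }
}

object RangeDemo extends App {
  println(RangeModel.range(5, 3)) // List(5, 6, 7)
  println(RangeModel.range(5, 0)) // List()
}
```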

Build a reactor.core.publisher.FluxProcessor whose data are emitted by the most recent emitted Publisher. The Flux will complete once both the publishers source and the last switched to Publisher have completed.

the produced type

The Publisher of switching Publisher to subscribe to.

the inner source request size

a reactor.core.publisher.FluxProcessor accepting publishers and producing T

Build a reactor.core.publisher.FluxProcessor whose data are emitted by the most recent emitted Publisher. The Flux will complete once both the publishers source and the last switched to Publisher have completed.

the produced type

The Publisher of switching Publisher to subscribe to.

a reactor.core.publisher.FluxProcessor accepting publishers and producing T

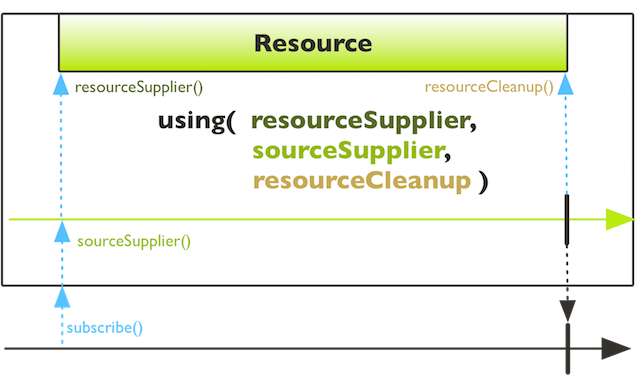

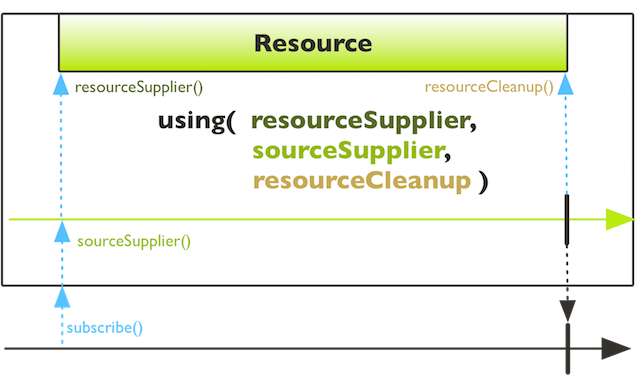

Uses a resource, generated by a supplier for each individual Subscriber, while streaming the values from a Publisher derived from the same resource and makes sure the resource is released if the sequence terminates or the Subscriber cancels.

emitted type

resource type

a java.util.concurrent.Callable that is called on subscribe

a Publisher factory derived from the supplied resource

invoked on completion

true to clean before terminating downstream subscribers

new Flux
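The resource lifecycle described above (acquire per subscriber, derive the sequence from the resource, always release) resembles a try/finally around the consumption of the sequence. The following plain-Scala sketch models that idea only. `UsingModel` is a hypothetical name, the sequence is an `Iterator` rather than a Publisher, and the eager flag and cancellation are not modelled.

```scala
import scala.collection.mutable.ListBuffer
import scala.util.Try

object UsingModel {
  def using[T, D](resourceSupplier: () => D)(sourceFactory: D => Iterator[T])(cleanup: D => Unit): List[T] = {
    val resource = resourceSupplier() // acquired once per "subscription"
    try sourceFactory(resource).toList // values derived from the resource
    finally cleanup(resource) // released on completion and on error
  }
}

object UsingDemo extends App {
  val log = ListBuffer.empty[String]

  val items = UsingModel.using(() => { log += "open"; "res" })(r => Iterator(r + "-1", r + "-2"))(_ => {
    log += "close"; ()
  })
  println(items)      // List(res-1, res-2)
  println(log.toList) // List(open, close)

  // The cleanup also runs when the derived sequence fails.
  val failed = Try(
    UsingModel.using(() => "res")(_ => Iterator.continually[String](throw new IllegalStateException("boom")))(_ => {
      log += "close after error"; ()
    })
  )
  println(failed.isFailure) // true
  println(log.last)         // close after error
}
```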

Uses a resource, generated by a supplier for each individual Subscriber, while streaming the values from a Publisher derived from the same resource and makes sure the resource is released if the sequence terminates or the Subscriber cancels.

Eager resource cleanup happens just before the source termination, and exceptions raised by the cleanup Consumer may override the terminal event.

emitted type

resource type

a java.util.concurrent.Callable that is called on subscribe

a Publisher factory derived from the supplied resource

invoked on completion

new Flux

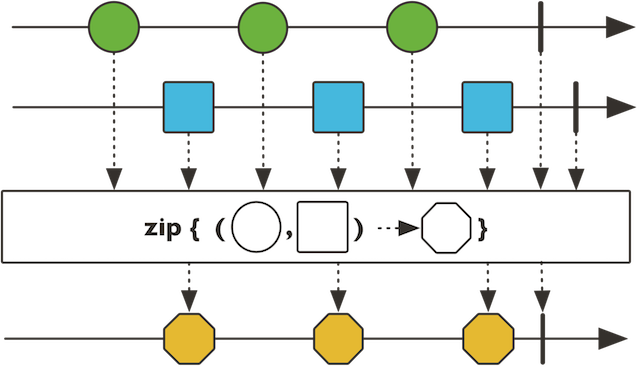

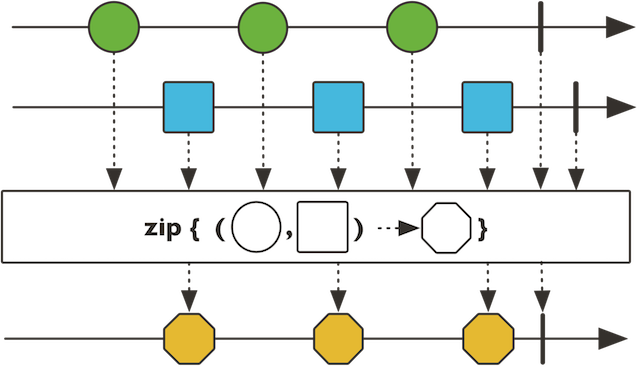

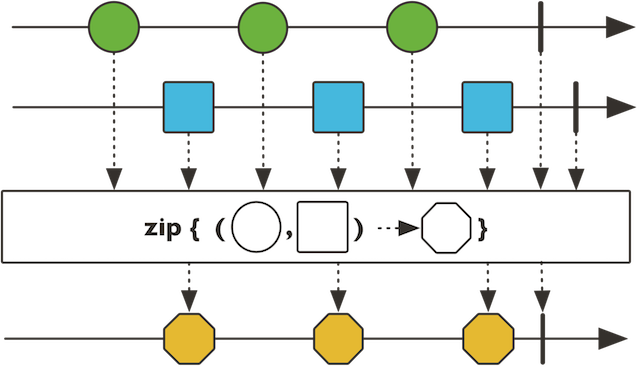

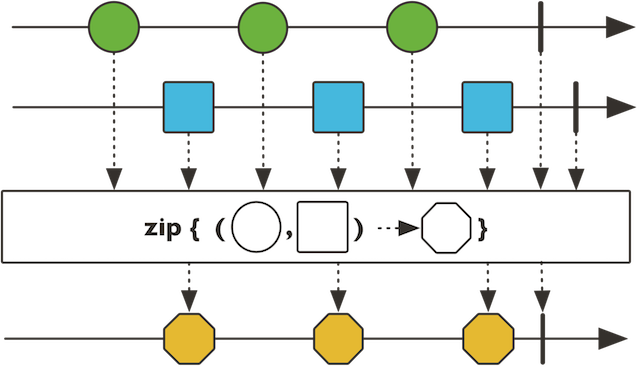

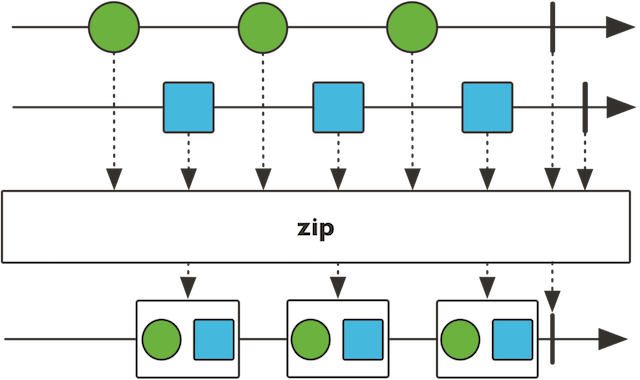

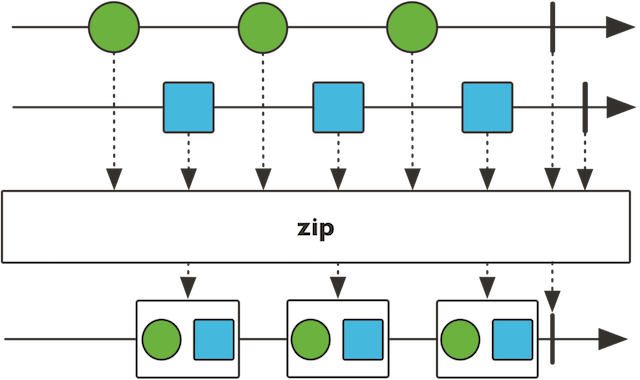

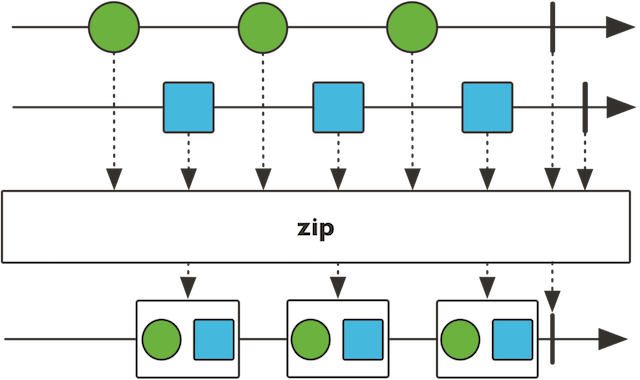

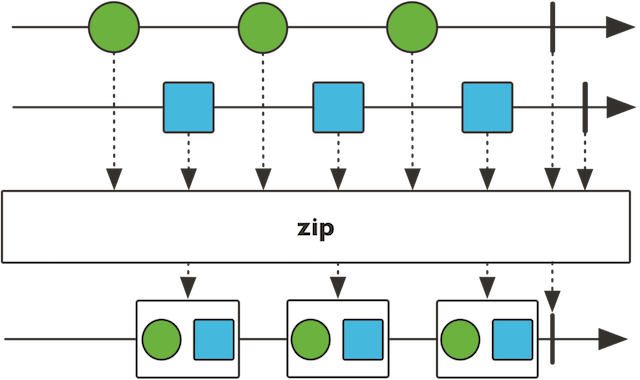

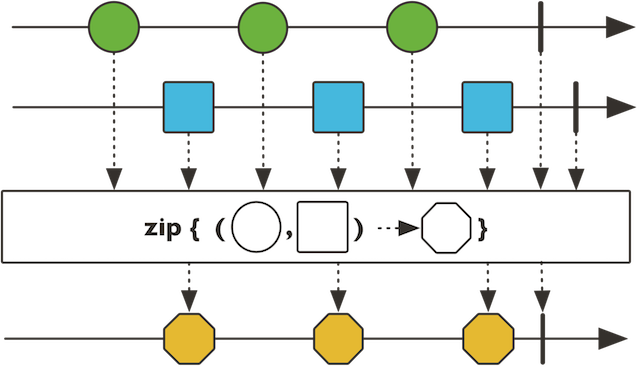

"Step-Merge" especially useful in Scatter-Gather scenarios. The operator will forward all combinations produced by the passed combinator function of the most recent items emitted by each source until any of them completes. Errors will immediately be forwarded.

the type of the input sources

the combined produced type

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

individual source request size

the Publisher array to iterate on Publisher.subscribe

a zipped Flux

"Step-Merge" especially useful in Scatter-Gather scenarios. The operator will forward all combinations produced by the passed combinator function of the most recent items emitted by each source until any of them completes. Errors will immediately be forwarded.

the type of the input sources

the combined produced type

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

the Publisher array to iterate on Publisher.subscribe

a zipped Flux

"Step-Merge" especially useful in Scatter-Gather scenarios. The operator will forward all combinations produced by the passed combinator function of the most recent items emitted by each source until any of them completes. Errors will immediately be forwarded.

The Iterable.iterator will be called on each Publisher.subscribe.

the combined produced type

the Iterable to iterate on Publisher.subscribe

the inner source request size

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

a zipped Flux

"Step-Merge" especially useful in Scatter-Gather scenarios. The operator will forward all combinations produced by the passed combinator function of the most recent items emitted by each source until any of them completes. Errors will immediately be forwarded.

The Iterable.iterator will be called on each Publisher.subscribe.

the combined produced type

the Iterable to iterate on Publisher.subscribe

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

a zipped Flux

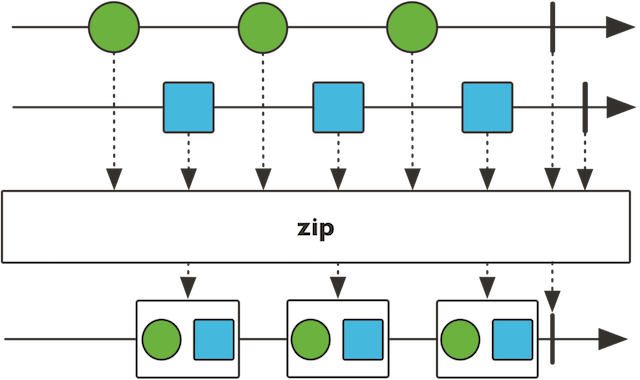

"Step-Merge" especially useful in Scatter-Gather scenarios. The operator will forward all combinations of the most recent items emitted by each source until any of them completes. Errors will immediately be forwarded.

type of the value from source1

type of the value from source2

type of the value from source3

type of the value from source4

type of the value from source5

type of the value from source6

The first upstream Publisher to subscribe to.

The second upstream Publisher to subscribe to.

The third upstream Publisher to subscribe to.

The fourth upstream Publisher to subscribe to.

The fifth upstream Publisher to subscribe to.

The sixth upstream Publisher to subscribe to.

a zipped Flux

"Step-Merge" especially useful in Scatter-Gather scenarios. The operator will forward all combinations of the most recent items emitted by each source until any of them completes. Errors will immediately be forwarded.

type of the value from source1

type of the value from source2

type of the value from source3

type of the value from source4

type of the value from source5

The first upstream Publisher to subscribe to.

The second upstream Publisher to subscribe to.

The third upstream Publisher to subscribe to.

The fourth upstream Publisher to subscribe to.

The fifth upstream Publisher to subscribe to.

a zipped Flux

"Step-Merge" especially useful in Scatter-Gather scenarios. The operator will forward all combinations of the most recent items emitted by each source until any of them completes. Errors will immediately be forwarded.

type of the value from source1

type of the value from source2

type of the value from source3

type of the value from source4

The first upstream Publisher to subscribe to.

The second upstream Publisher to subscribe to.

The third upstream Publisher to subscribe to.

The fourth upstream Publisher to subscribe to.

a zipped Flux

"Step-Merge" especially useful in Scatter-Gather scenarios. The operator will forward all combinations of the most recent items emitted by each source until any of them completes. Errors will immediately be forwarded.

type of the value from source1

type of the value from source2

type of the value from source3

The first upstream Publisher to subscribe to.

The second upstream Publisher to subscribe to.

The third upstream Publisher to subscribe to.

a zipped Flux

"Step-Merge" especially useful in Scatter-Gather scenarios. The operator will forward all combinations of the most recent items emitted by each source until any of them completes. Errors will immediately be forwarded.

type of the value from source1

type of the value from source2

The first upstream Publisher to subscribe to.

The second upstream Publisher to subscribe to.

a zipped Flux

"Step-Merge" especially useful in Scatter-Gather scenarios. The operator will forward all combinations produced by the passed combinator function of the most recent items emitted by each source until any of them completes. Errors will immediately be forwarded.

type of the value from source1

type of the value from source2

The produced output after transformation by the combinator

The first upstream Publisher to subscribe to.

The second upstream Publisher to subscribe to.

The aggregate function that will receive a unique value from each upstream and return the value to signal downstream

a zipped Flux
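To make the "until any of them completes" rule concrete, here is a plain-Scala model of a two-source zip with a combinator. It is a sketch for illustration, not library code. `ZipModel` is a hypothetical name and the sources are finite lists. It assumes each output consumes exactly one item from every source, so items are paired by position.

```scala
object ZipModel {
  // Pairs the n-th item of each source and stops as soon as the shorter source is exhausted.
  def zip[A, B, V](source1: List[A], source2: List[B])(combinator: (A, B) => V): List[V] =
    source1.zip(source2).map { case (a, b) => combinator(a, b) }
}

object ZipDemo extends App {
  val out = ZipModel.zip(List(1, 2, 3), List("a", "b"))((n, s) => s"$n$s")
  println(out) // List(1a, 2b): the third item of the first source is never combined
}
```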